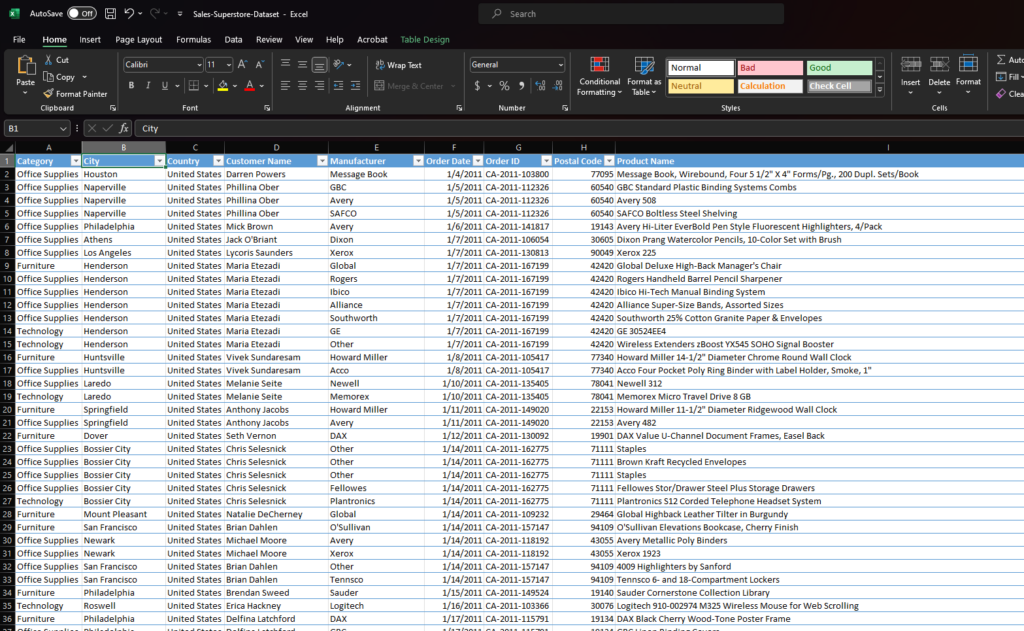

Spreadsheet tools like Microsoft Excel and Google Sheets are often the critical first step in data science. Most raw data is provided or exported in a flat format such as a spreadsheet or comma-separated file (.xlsx, .csv, etc.).

Before we can analyze data in Python, SQL, or a data visualization tool like Tableau, we need to explore it and ensure that it is accurate and “clean.” This stage of data science is known as “pre-processing.”

Pre-processing means cleaning and transforming raw data so that the dataset is in a format that can be easily analyzed and is free from errors, inconsistencies, and missing values.
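As a rough illustration, the sketch below applies a few common pre-processing steps to a small CSV export using only Python's standard library: trimming stray whitespace, standardizing text case, converting numbers from text, and dropping rows with missing values. The column names, the sample data, and the `clean_rows` helper are hypothetical; real projects often use a library such as pandas for this.

```python
import csv
import io

# Hypothetical raw export with stray whitespace, inconsistent casing,
# and a missing value in the "sales" column.
RAW_CSV = """region,sales
 East ,100
west,250
EAST,
North,75
"""


def clean_rows(raw_text):
    """Return cleaned rows: trimmed, title-cased regions, integer sales, no blanks."""
    cleaned = []
    for row in csv.DictReader(io.StringIO(raw_text)):
        region = row["region"].strip().title()  # " East " -> "East", "EAST" -> "East"
        sales = row["sales"].strip()
        if not region or not sales:
            continue  # drop incomplete rows rather than guessing a value
        cleaned.append({"region": region, "sales": int(sales)})
    return cleaned


for row in clean_rows(RAW_CSV):
    print(row)
```

Dropping incomplete rows is only one option. Depending on the analysis, filling in a default value or flagging the row for manual review may be more appropriate.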

What does “data cleanliness” mean?

Data cleanliness refers to the quality and accuracy of data. Clean data is free from errors, inconsistencies, and inaccuracies that could impact its usefulness for analysis and decision-making. To be considered clean, data should be complete, accurate, consistent, and standardized.

Keeping data clean involves ensuring that it is entered correctly, validated regularly, and maintained properly over time. Data cleanliness is an essential aspect of data management and is critical for ensuring that organizations can rely on their data to make informed decisions.
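Some of these criteria can be checked automatically. The minimal sketch below, written against hypothetical records and field names, flags missing values (completeness), non-standard state codes (consistency), and dates that don't follow one assumed format, YYYY-MM-DD (standardization). Accuracy usually requires comparing values against a trusted source, so it is not covered here.

```python
from datetime import datetime

# Hypothetical records; in practice these would come from a spreadsheet export.
RECORDS = [
    {"id": "1", "state": "CA", "signup_date": "2023-01-15"},
    {"id": "2", "state": "", "signup_date": "2023-02-01"},
    {"id": "3", "state": "ca", "signup_date": "02/10/2023"},
]


def is_iso_date(value):
    """True if value parses as YYYY-MM-DD (the standard format assumed here)."""
    try:
        datetime.strptime(value, "%Y-%m-%d")
        return True
    except ValueError:
        return False


def find_issues(records):
    """Return (id, problem) pairs for a few simple cleanliness checks."""
    issues = []
    for rec in records:
        # Completeness: every field should have a value.
        for field, value in rec.items():
            if not value.strip():
                issues.append((rec["id"], f"missing {field}"))
        # Consistency: state codes should be upper-case, two-letter codes.
        state = rec["state"].strip()
        if state and (len(state) != 2 or not state.isupper()):
            issues.append((rec["id"], "non-standard state code"))
        # Standardization: all dates should share one format.
        date = rec["signup_date"].strip()
        if date and not is_iso_date(date):
            issues.append((rec["id"], "non-ISO date"))
    return issues


for issue in find_issues(RECORDS):
    print(issue)
```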

If data is not properly cleaned prior to analysis and visualization, even the most beautiful dashboards will be useless for decision-making.

This dataset from Tableau Public is clean and ready to be analyzed or imported into a data visualization tool like Tableau.

Exploratory Data Analysis (EDA)

Ensuring data cleanliness is a critical step for Exploratory Data Analysis (EDA), an approach to initial data analysis that involves summarizing a dataset's main characteristics and identifying patterns, trends, relationships, and anomalies.
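To make "summarizing main characteristics and identifying anomalies" concrete, here is a minimal sketch using Python's built-in `statistics` module on a hypothetical list of daily sales figures. The 1.5 × IQR rule used to flag outliers is one common convention, not the only way to detect anomalies.

```python
import statistics

# Hypothetical daily sales figures; 480 looks suspicious.
SALES = [120, 135, 128, 142, 131, 480, 125, 138]


def summarize(values):
    """Return basic descriptive statistics for a list of numbers."""
    return {
        "count": len(values),
        "mean": statistics.mean(values),
        "median": statistics.median(values),
        "stdev": statistics.stdev(values),
        "min": min(values),
        "max": max(values),
    }


def iqr_outliers(values):
    """Flag values more than 1.5 * IQR below the first or above the third quartile."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
    return [v for v in values if v < low or v > high]


print(summarize(SALES))
print("Possible anomalies:", iqr_outliers(SALES))  # → Possible anomalies: [480]
```

Here the mean (174.875) sits well above the median (133.0) because of the single large value, which is exactly the kind of pattern an initial summary is meant to surface before deeper analysis.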

We’ll explore EDA in more detail in the future.